AI Safety in China #1

UN Security Council talks AI risks, AI Law expert draft, Chinese-language values benchmark, and Concordia AI at the Beijing Academy of AI Conference

The AI Safety in China Newsletter is produced by Concordia AI (安远AI), a Beijing-based social enterprise focused on artificial intelligence (AI) safety and governance. We provide expert advice on AI safety and governance, support AI safety communities in China, and promote international cooperation on AI safety and governance. Concordia AI is an independent institution, not affiliated with or funded by any government or political group. For more information, please visit our website.

AI is an expansive and ever-growing field. This newsletter focuses on China’s role in mitigating AI risks, particularly extreme risks from frontier AI models. It will cover China’s international governance positions, domestic governance efforts, and technical research on safety and alignment. We plan to publish the newsletter approximately every two weeks, and each edition will focus primarily, but not exclusively, on developments from the preceding two weeks. This first edition, however, covers developments from the past three months more broadly to give readers context for future editions.

Key Takeaways

Chinese diplomat ZHANG Jun and academic advisor ZENG Yi gave separate remarks at a UN Security Council meeting on AI, expressing hopes for international action to reduce risks from AI, such as by ensuring human control.

Baidu CEO Robin Li cautioned against the risk of losing control of advanced AI.

Over the past several months, Chinese research groups have published work on improving the safety of reinforcement learning and on aligning AI with human values.

Chinese scholars have released a non-official draft of China’s AI Law, and its provisions reflect concern about some frontier AI risks.

Chinese regulators finalized interim regulations on generative AI just three months after publishing a draft, a quicker turnaround than for previous AI regulations.

International AI Governance

A Chinese diplomat and an expert advisor separately warn of risks from AI in landmark UN Security Council meeting

Background: During the United Nations Security Council’s (UNSC) first-ever formal meeting focused on AI, Chinese Ambassador to the UN ZHANG Jun (张军) and Chinese expert Dr. ZENG Yi (曾毅) delivered separate remarks.[1] Zeng Yi is a leading academic advisor to the Chinese government and one of seven experts on the Ministry of Science and Technology’s National Next Generation AI Governance Expert Committee. He is also a senior researcher at the Chinese Academy of Sciences Institute of Automation and has taken part in earlier international efforts on AI risk, including signing the Center for AI Safety’s Statement on AI Risk in May 2023.

Zhang Jun’s speech: Ambassador Zhang emphasized that AI development must be safe and controllable: “safety is the bottom line that must be upheld” (En, Ch). He called for establishing risk warning and response mechanisms, ensuring human control, and strengthening the testing and evaluation of AI so that “mankind has the ability to press the stop button at critical moments.” Ambassador Zhang also expressed concern about the misuse of AI by terrorists or extremists. He emphasized that China supports international exchanges on AI governance, in line with China’s Global Security Initiative.[2] In the same speech, Ambassador Zhang repeated a common Chinese government criticism of the US by referring to a “certain developed country” that seeks “technological hegemony” and “maliciously obstruct[s] the technological development of other countries.” Other topics in his speech included using AI to bridge the gap between the Global North and South, governing AI within the UN framework, and opposing the misuse of AI weapons.

Zeng Yi’s speech: Dr. Zeng’s remarks highlighted concerns that AI carries “a risk of causing human extinction.”[3] Looking ahead to much more advanced AI, he warned that “we have not given super-intelligence any practical reasons why it should protect humankind, and the solution to that may take decades to find.” He also expressed doubt that generative AI is actually intelligent, arguing that such models lack real understanding, and said that AI should never pretend to be human. Dr. Zeng’s policy recommendations included creating a UNSC working group on near-term and long-term challenges posed by AI and ensuring that humans retain final decision-making authority over nuclear weapons.

Implications: Ambassador Zhang’s references to ensuring human control over AI suggest that the Chinese government is aware of, and concerned about, extreme AI risks. Dr. Zeng’s speech shows that worries about long-term, catastrophic risks from AI exist within elite Chinese circles. Cooperation on these risks could be an area of shared interest between China and other major AI powers, but whether the Chinese (or any) government will act meaningfully and quickly to reduce extreme AI risks remains to be seen.

Read more: For additional translations of AI risk writings by Dr. Zeng and other leading Chinese thinkers, see Chinese Perspectives on AI, a new website created by Concordia AI. We will update this website periodically with new translations.

Chinese tech CEO cautions against losing control to advanced AI

Background: Baidu CEO Robin Li (李彦宏) gave a speech (En, Ch) titled “Large Models are Changing the World” at the 2023 Zhongguancun Forum on May 26. The Forum, held in Beijing’s high-tech and startup hub of Zhongguancun, convenes top tech leaders from around the world. This year, it featured a congratulatory letter by Xi Jinping and a video address by Bill Gates, among others.

The speech: Robin Li’s remarks focused largely on the benefits of large models but also noted worries about developments harmful to humanity, calling for efforts to prevent loss of control over advanced AI. To our knowledge, this is the first public reference to loss of control by the CEO of a major Chinese tech company. Li called for countries with advanced AI technology to cooperate and to institute rules inspired by the paradigm of a “community of common destiny for humankind,” an important foreign policy slogan of President Xi Jinping.[4] He also stressed the need to participate in rule-making processes, since “only by first going up to the cards table can you obtain discourse power and gain an entrance ticket to the global competition.”

Implications: These remarks could signal Baidu’s openness to discussing AI safety cooperation with foreign developers. Because Baidu has developed one of the best-performing Chinese large language models (LLMs) to date, it is a key trendsetter in the industry.

Domestic AI Governance

First preview of China’s AI Law released

Background: The Cyber and Information Law Research Office of the Chinese Academy of Social Sciences Legal Research Institute published the first version of a draft model AI Law (En, Ch) on August 15.[5] The model law, drafted by a group of non-governmental experts, seeks to inform discussions around a national AI Law, which the State Council announced in its annual legislative work plan in June 2023. The actual law will be drafted by a government body and go through multiple rounds of revision, but this draft still offers insight into Chinese expert thinking on AI governance. While the model law is quite comprehensive and largely oriented toward promoting AI innovation, our analysis below focuses on its treatment of frontier AI risks.

Approach to frontier risks: The draft model law seeks to control the riskiest AI scenarios through a negative list: researching, developing, or providing listed AI products and services requires a government-approved permit. It also creates a new division of responsibilities between AI researchers and developers on the one hand and providers on the other, and proposes a National AI Office to regulate and oversee AI development. Its treatment of risks from frontier models falls into several categories.

Foundation models: The drafters stipulate extra compliance requirements for foundation models because of their key role in the industry chain. In particular, organizations pursuing foundation model R&D would need to accept yearly “social responsibility reports” produced by an independent institution composed mostly of third parties (Article 43).

Worries about loss of control: Preventing loss of control is one criterion for securing a negative list permit, which requires staff with strong knowledge of human supervision and of technical assurance measures for safe and controllable AI (Article 25, Clauses 3 and 5). Providers of negative-list AI services must also ensure that humans can intervene or take over at any point during the automated operation of those services (Article 50, Clause 4).

Government reporting: Safety incidents will need to be reported to the government (Article 34). Government regulators can also call companies in to meet about safety risks or incidents and can require companies to undergo compliance audits by a professional institution (Article 54).

Safety and risk assessments: Various provisions in the draft model law refer to risk management assessments by AI developers or the government, security assessments, and ethics reviews (Articles 38, 39, 40, 42, and 51). The exact risks and security concerns are not specified and likely center on cybersecurity, data security, privacy, and discrimination. However, the drafters appear somewhat concerned about more dangerous capabilities too, given a reference to “emergent” (涌现) properties of models in the statement accompanying their draft.

Technical safety research: The model law calls for government support to organizations researching and developing technology related to AI monitoring and warning, security assessments, and other regulatory or compliance related technologies.

Read more: Concordia AI led a translation of the draft model law for Stanford’s DigiChina project. Commentary on the model law by scholars invited by DigiChina can also be found here.

China rapidly enacts new regulation on generative AI

Background: The Cyberspace Administration of China (CAC), responsible for content control, data security, privacy, and other wide-ranging cyberspace oversight functions, released the Interim Generative AI Services Management Measures on July 13. These measures finalize a draft released in April, a rapid three-month turnaround compared with past AI-related regulations (nine months for the deep synthesis regulations and four months for the recommendation algorithm regulations). The CAC remains faster than US and EU regulators at issuing new AI regulations.

Loosened restrictions: The final measures drop some of the most restrictive requirements in the April draft, such as requiring generated content and training data to be truthful and accurate (真实准确) and requiring that models be fine-tuned again within three months of reports that they generated banned content (draft Article 15). They also appear to remove research not open to the public, and possibly internal business applications, from the scope of the regulation. Meanwhile, the new version contains more language supporting AI development (Articles 5 and 6). The participation of the National Development and Reform Commission (NDRC)[6] and the Ministry of Science and Technology (MOST),[7] which did not co-sign the two previous major AI regulations, also indicates greater interest in development.

Implications: While the interim regulation does not address extreme risks, such as losing control of advanced AI systems, it does reference preventing risks and international cooperation. In addition, the existing policy framework, such as the algorithm registry and security assessments, could be conducive to managing such risks in the future.[8]

Technical Safety Developments

Research group benchmarks human values for Chinese-language models

Background: A research group at Alibaba and Beijing Jiaotong University, overseen by Alibaba Cloud CTO and DAMO Academy Associate Dean ZHOU Jingren (周靖人), released a preprint paper on July 19 assessing human values alignment of Chinese models.

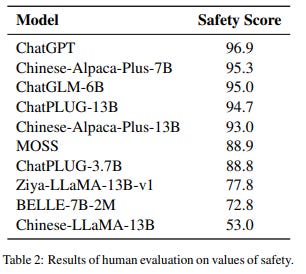

The research: The group’s CValues benchmark (ModelScope, GitHub) claims to be the first to assess value alignment in Chinese-language LLMs. The benchmark assesses models on both “safety” (the level of harmful or risky content in responses) and “responsibility” (providing “positive guidance and humanistic care”). The authors tested ChatGPT and nine domestically developed models (see table), finding that ChatGPT scored highest on the safety benchmark. Only ChatPLUG-13B was tested on the responsibility benchmark; it scored lowest in the domains of social science and law but relatively high in environmental science and psychology.

Implications: This paper shows that Chinese researchers are paying attention to the problem of value alignment, and it provides a new way to test Chinese models for alignment with human values. The researchers also did early work on defining a concept of responsibility, which they contend should be a “higher requirement compared to safety.”

Peking University Alignment Lab releases research on making RLHF safer

Background: The PKU Alignment and Interaction Research Lab (PAIR Lab) at Peking University is undertaking research on decision making, strategic interactions, and value alignment. PAIR Lab’s alignment research focuses on reinforcement learning from human feedback (RLHF), multi-agent alignment, self-alignment, and constitutional AI, “to steer AGI development towards a safe, beneficial future aligned with the progression of humanity.”

The research: Since May, PAIR has released OmniSafe, an open-source framework providing algorithms to accelerate safe reinforcement learning research. OmniSafe includes substantial API documentation and user guides to help even newcomers learn safe reinforcement learning. PAIR also released Beaver, an open-source library for improving large language model safety with a modified version of RLHF that they call “safe RLHF,” based on “constrained value alignment.” PAIR’s research found that its Beaver model, trained on LLaMA using safe RLHF, outperformed the Alpaca model on safety metrics.
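For readers curious what “constrained value alignment” means in practice: as we understand PAIR’s public description, safe RLHF trains separate models for helpfulness (a reward) and harmlessness (a cost), then maximizes reward subject to a limit on expected cost, using a Lagrange multiplier that grows whenever the cost limit is exceeded. The toy Python sketch below illustrates only that constrained-optimization idea. Everything in it is a hypothetical assumption (a single “helpfulness level” parameter x, made-up reward and cost functions, a made-up cost limit); it is not PAIR’s code or the Beaver training procedure.

```python
"""Toy illustration of constrained optimization with a Lagrange multiplier.

Hypothetical setup (not PAIR's code): a one-parameter "policy" picks a
helpfulness level x in [0, 1]. Reward rises with x, but so does a harm
cost. We maximize reward subject to cost(x) <= COST_LIMIT.
"""

COST_LIMIT = 0.25  # hypothetical safety budget on the cost


def reward(x: float) -> float:
    """Stand-in for a learned helpfulness (reward) model."""
    return x


def cost(x: float) -> float:
    """Stand-in for a learned harmfulness (cost) model."""
    return x * x


def train(steps: int = 5000, lr_x: float = 0.05, lr_lam: float = 0.05) -> tuple[float, float]:
    """Run simultaneous primal-dual gradient updates; return (x, lambda)."""
    x, lam = 0.0, 0.0
    for _ in range(steps):
        # Lagrangian: L(x, lam) = reward(x) - lam * (cost(x) - COST_LIMIT).
        # Its derivative in x is 1 - 2 * lam * x for these toy functions.
        grad_x = 1.0 - 2.0 * lam * x
        # Positive when the cost limit is exceeded, negative when satisfied.
        violation = cost(x) - COST_LIMIT
        # Policy step: gradient ascent on L, keeping x inside [0, 1].
        x = min(1.0, max(0.0, x + lr_x * grad_x))
        # Multiplier step: grows while the constraint is violated, never negative.
        lam = max(0.0, lam + lr_lam * violation)
    return x, lam


if __name__ == "__main__":
    x, lam = train()
    print(f"helpfulness level x = {x:.3f}, multiplier lambda = {lam:.3f}")
```

Without the constraint, reward alone would push the toy policy to x = 1. With the cost limit of 0.25, the constrained optimum is x = 0.5, where the constraint is exactly binding, and setting 1 − 2λx = 0 there gives λ = 1, which is where this sketch should settle. In a real safe RLHF setup, as we understand it, the same balancing logic is applied to the parameters of a language model policy, with the multiplier updated from batch estimates of the expected cost.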

Implications: PAIR’s explicit focus on alignment is an encouraging sign of growing interest among Chinese scientists towards researching technical AI safety problems. At present, it is unclear how promising the “safe RLHF” approach is.

Concordia AI’s Recent Work

Concordia AI co-hosts Beijing forum featuring distinguished participants from Chinese and Western labs and universities

Concordia AI co-hosted and moderated a full-day forum on AI Safety and Alignment (AI安全与对齐) during the Beijing Academy of AI (BAAI) conference on June 10, 2023. Experts from OpenAI, Anthropic, DeepMind, University of California, Berkeley, Tsinghua University, Peking University, BAAI, and other notable institutions gave speeches during the forum.

Highlights include:

OpenAI CEO Sam Altman called for global cooperation with China on reducing AI risks, including through contributions from Chinese researchers to technical alignment research, in a speech widely reported in Western media.

University of California, Berkeley, Professor Stuart Russell and Turing Award winner Andrew Yao (姚期智) discussed ensuring that AI decisions can reflect everyone’s interests and achieving international cooperation before an AGI arms race occurs.

BAAI Dean HUANG Tiejun (黄铁军) discussed the difficulty of humans controlling an entity that has surpassed human intelligence, arguing that we know so little about how to build safe AI that the discussion cannot yet be settled.

“Godfather of AI” Geoffrey Hinton gave a speech on why superintelligence may occur earlier than he previously thought, and the difficulties of ensuring human control.

Feedback and Suggestions

Please reach out to us at info@concordia-ai.com if you have any feedback, comments, or suggestions for topics to cover.

Written by Concordia AI

[1] To see the meeting transcript, click on the hyperlink S/PV.9381 on the Security Council Meetings in 2023 page.

[3] See the transcript of the meeting, linked in footnote 1, for the full text.

[4] In Chinese, 人类命运共同体. For additional information, see this explainer by the Chinese government or this article by the China Media Project.

[5] 中国社会科学院法学研究所网络与信息法研究室. The Chinese Academy of Social Sciences (CASS) is a prominent and influential state-overseen think tank, though it has not generally been considered a key actor in Chinese AI policymaking so far.

[6] NDRC is China’s macroeconomic planning agency, with wide-ranging responsibilities covering macroeconomic policy, industrial policy, price regulation, social development, and the digital economy.

[7] MOST formulates and supervises implementation of China’s innovation and science & technology development policies.

[8] For a good English-language treatment, see this report from the Carnegie Endowment.