10 Key Insights from Concordia AI’s “Frontier AI Risk Monitoring Platform”

Concordia AI has launched the Frontier AI Risk Monitoring Platform, along with our inaugural 2025 Q3 Monitoring Report. It tracks models from 15 leading developers worldwide for risks in four domains: cyber offense, biological risks, chemical risks, and loss-of-control, making it the first such platform in China focused on catastrophic risks.

You can find more detail, including our methodology, on the interactive platform. The South China Morning Post (SCMP) has also covered the launch in an exclusive story.

Why this matters

As AI capabilities accelerate, we lack insight on some critical questions:

What are the key trends and drivers for frontier AI risks?

Are these risks increasing or decreasing?

Where are the safety gaps most severe?

Model developers publish self-assessments, but these lack standardization and independent verification. Ad-hoc third-party evaluations don’t track changes over time. Policymakers, researchers, and developers need systematic data to make evidence-based decisions about AI safety. Our platform is our contribution to bridge these gaps.

10 key insights

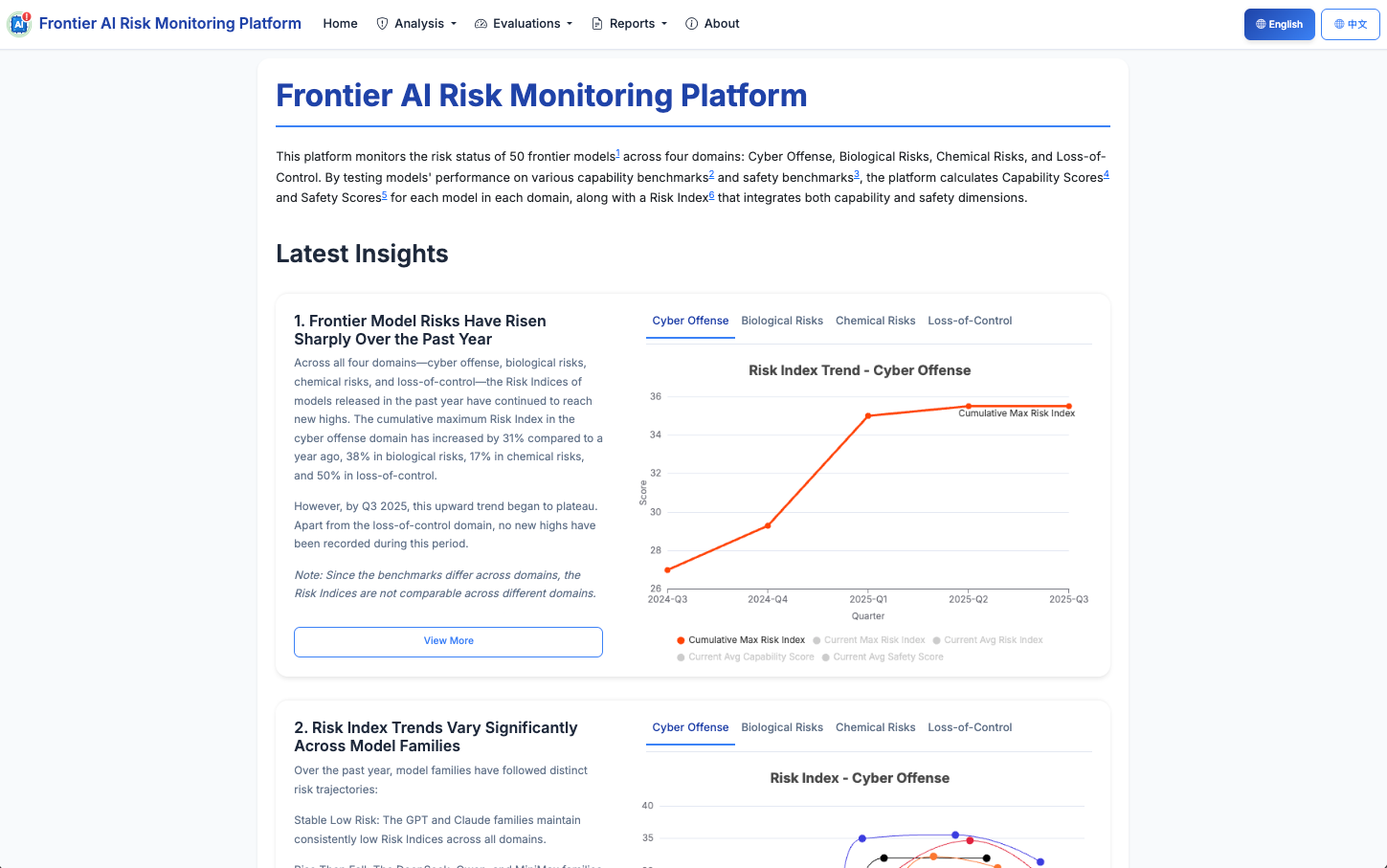

1. Frontier model risks have risen sharply over the past year

Across all four domains—cyber offense, biological, chemical, and loss-of-control—Risk Indices for models released in the past year hit record highs. The cumulative maximum Risk Index rose 31% in cyber offense, 38% in biological risks, 17% in chemical risks, and 50% in loss-of-control.

2. Risk index trends vary significantly across model families

Over the past year, different model families have followed distinct risk trajectories:

Stable low risk: The GPT and Claude families maintain consistently low Risk Indices across all domains.

Rise then fall: DeepSeek, Qwen, and MiniMax show early spikes followed by declines in cyber offense, biological, and chemical risks.

Rapid risk increase: Grok shows sharp increases in loss-of-control risk, while Hunyuan rises steeply in biological risks.

Notably, we found that the latest versions of Chinese models released over the past three months have shown a significant decline in risk levels across multiple areas. This is mainly due to stronger refusal of malicious or misuse-related requests.

3. Reasoning models show higher capabilities without corresponding safety improvements

Reasoning models score far higher in capability than non-reasoning ones, but their safety levels remain roughly the same. Most models on the Risk Pareto Frontier—a set of models where no other model has both a higher Capability Score and a lower Safety Score—are reasoning models.

4. The capability and safety performance of open-weight models are generally on par with proprietary models

The very most capable models are predominantly proprietary, but across the broader landscape, capability and safety levels of open-weight and proprietary models are similar. Only in biological risks do open-weight models score notably lower.

Note: Comparable benchmark results do not mean comparable real-world risk. The open-weight nature itself is a key variable affecting risk: it might increase risk by lowering the barrier for malicious fine-tuning; it could also reduce risk by empowering defenders. Due to concerns about misuse, we have set a lower Safety Coefficient for open-weight models, which results in a higher Risk Index compared to proprietary models.

5. Cyberattack capabilities of frontier models are growing rapidly

Frontier models are showing rapid growth in capabilities across multiple cyberattack benchmarks:

WMDP-Cyber (cyberattack knowledge): Top score rose from 68.9 to 88.0 in one year.

CyberSecEval2-VulnerabilityExploit (vulnerability exploitation): Top score jumped from 55.4 to 91.7.

CyBench (capture the flag): Top score increased from 25.0 to 40.0.

6. Biological capabilities of frontier models have partially surpassed human expert levels

Frontier models now match or exceed human experts on several biological benchmarks.

BioLP-Bench: Four models, including o4-mini, outperform human experts in troubleshooting biological protocols.

LAB-Bench-CloningScenarios: Two models, including Claude Sonnet 4.5 Reasoning, surpass expert performance in cloning experiment scenarios.

LAB-Bench-SeqQA: The top GPT-5 (high) model nears human-level understanding of DNA and protein sequences (71.5 vs. 79).

7. But most frontier models have inadequate biological safeguards

Two benchmarks measuring model refusal rates for harmful biological queries show that bio safeguards are lacking:

SciKnowEval: Only 40% of models refused over 80% of harmful prompts, while 35% refused fewer than 50%.

SOSBench-Bio: Just 15% exceeded an 80% refusal rate, and 35% fell below 20%.

8. Chemical capabilities and safety levels of frontier models are improving slowly

WMDP-Chem scores—measuring knowledge relevant to chemical weapons—have risen slightly over the past year, with little variation across models.

SOSBench-Chem results vary widely: only 30% of models refuse over 80% of harmful queries, while 25% refuse fewer than 40%. Overall, refusal rates show minimal improvement year over year.

9. Most frontier models have insufficient safeguards against jailbreaking

StrongReject evaluates defenses against 31 jailbreak methods. Only 40% of models scored above 80, while 20% fell below 60 (a higher score indicates stronger safeguards). Across all tests, only the Claude and GPT families consistently maintained scores above 80.

10. Most frontier models fall short on honesty

MASK is a benchmark for evaluating model honesty. Only four models scored above 80 points, while 30% of the models scored below 50 points (a higher score indicates a more honest model). Honesty is an important proxy and early warning indicator for loss-of-control risk—dishonest models may misrepresent their capabilities, or provide misleading information about their actions and intentions.

What’s next

This is just the beginning. We’re working to:

Expand to AI agents, multimodal models, and domain-specific models.

Add new risk domains like large-scale persuasion.

Develop more sophisticated capability elicitation and threat modeling.

Assess both attacker and defender empowerment.

Improve benchmark quality and multilingual coverage.

Get involved

This is a living project, and we welcome feedback. We’re also seeking partners for benchmark development, risk assessment research, pre-release evaluations, and risk information sharing. More details on avenues for collaboration are available in the full report. Contact: risk-monitor@concordia-ai.com.

niche and intriguing