AI Safety in China #18

China-US agreement on AI in nuclear weapons, China on AI governance at the G20 and UN, industry alliance safety testing, and papers on mechanistic interpretability and AI x chemical safety

Key Takeaways

China and the US jointly affirmed the need for human control over nuclear weapons decisions, the most significant output from intergovernmental talks on AI safety.

Top Chinese officials continue to prioritize AI governance in global forums, with references in President Xi’s G20 speech and the creation of a “Group of Friends” for AI capacity-building.

The AI Industry Alliance of China held a seminar on agent governance, and an article in the Communist Party of China’s flagship newspaper discussed AI agent risks at length.

Recent technical papers have covered topics including benchmarking LLM safety in the chemical domain, examining the role of attention heads in LLM safety, and testing the capability of LLMs to self-replicate.

International AI Governance

China and US reach new agreement on AI in nuclear systems

Background: On November 16, Chinese President Xi Jinping and US President Joe Biden met (Ch, US) on the sidelines of the Asia-Pacific Economic Cooperation (APEC) summit in Lima, Peru. Their previous in-person meeting in San Francisco at APEC 2023 resulted in the creation of the China-US intergovernmental AI dialogue.

Key developments on AI safety: The leaders reached a new agreement on AI and nuclear weapons. In the first such joint China-US statement, both sides affirmed the need to maintain human control over decisions to use nuclear weapons. US National Security Advisor Jake Sullivan stated that this constitutes a “foundation for [the US and China] being able to work on nuclear risk reduction together … and work on AI safety and risk together.” The leaders also discussed their co-sponsorship of each other’s UN General Assembly resolutions on AI, the need to address AI risks and improve AI safety, and promoting AI for good.

Implications: This agreement represents a substantial and concrete output from the China-US intergovernmental talks on AI. It remains to be seen whether the talks will continue under a new US administration, but this agreement likely signifies that the door to further dialogue remains open from China’s perspective.

Chinese President Xi raises AI governance again at G20

Background: On November 18, Chinese President Xi Jinping delivered a speech (Ch, En) at the 19th G20 Leaders’ Summit in Rio de Janeiro on the reform of global governance institutions.

Discussion of AI governance in the speech: President Xi’s speech covered five key areas of global governance reform, the fourth of which was digital governance. In the section on digital governance, Xi emphasized three main aspects of AI governance:

The need to strengthen international cooperation on AI governance and ensure AI benefits all of humanity.

China’s efforts to promote international AI governance, including through the 2024 World AI Conference (WAIC) and High-Level Meeting on Global AI Governance, the Shanghai Declaration on Global AI Governance, and a UN General Assembly resolution on AI capacity building.

Inviting G20 members to participate in WAIC 2025.

Implications: This speech shows that AI is among the top international governance issues in the minds of Chinese policymakers. It is noteworthy that over half of Xi’s remarks on digital governance focused on AI, also reflecting high prioritization of AI among other digital issues.

China continues pursuing cooperation on AI development and safety through the UN

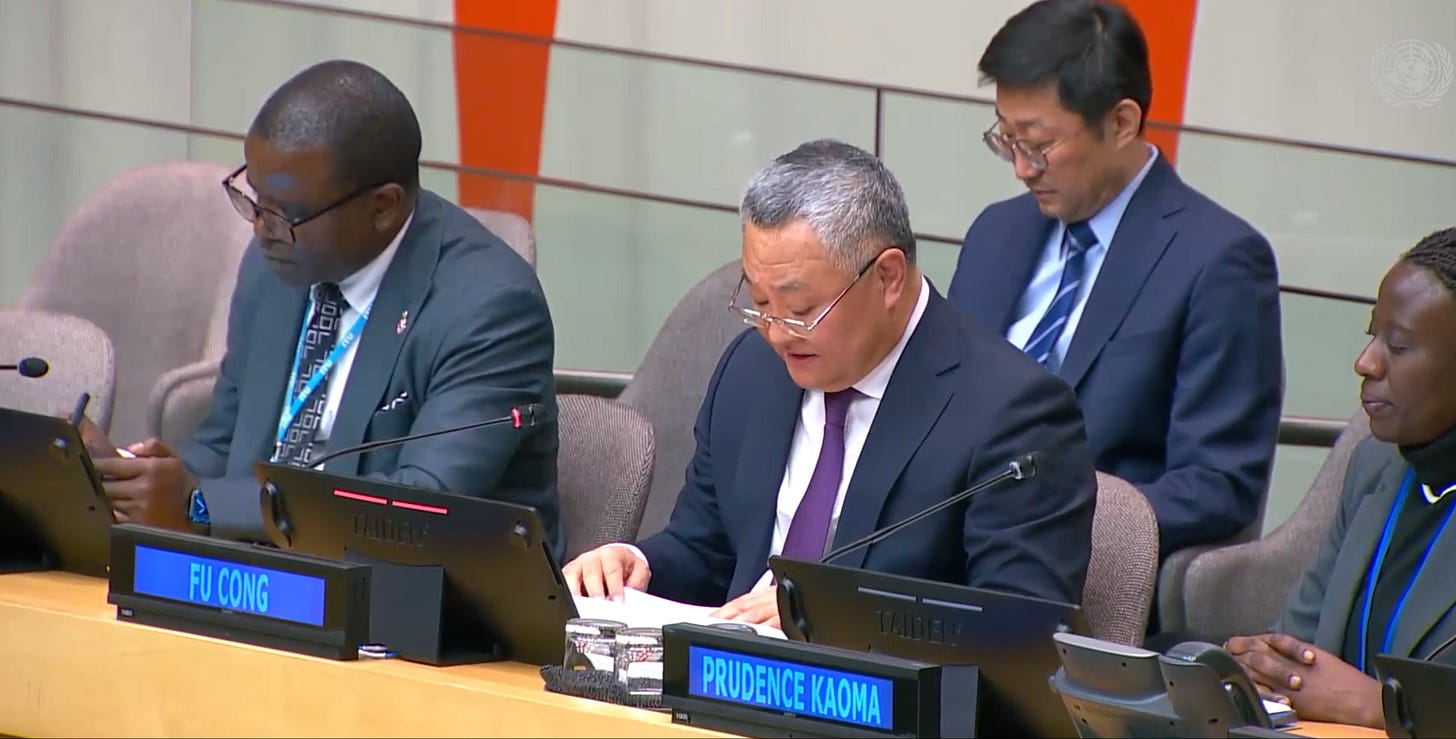

Background: On October 21, Chinese Ambassador to the UN FU Cong (傅聪) gave remarks at a UN Security Council briefing on “Anticipating the Impact of Scientific Developments on International Peace and Security,” focusing most strongly on the effects of AI. On December 5, China and Zambia co-hosted the inaugural meeting of the “Group of Friends for International Cooperation on AI Capacity-building” at UN headquarters in New York.

Fu’s remarks: Fu emphasized three key principles for technology governance: 1) technology development must follow ethical norms and benefit all countries, with the UN as a main platform for global governance; 2) bridging the digital divide is crucial both for development and for addressing safety risks, referencing China’s UNGA resolution on AI capacity building; and 3) safety and control is a “bottom-line requirement” for technology development, and AI “must be kept under human control at all times.” Fu additionally criticized “small yard and high fence” policies (a reference to US export controls) and argued that they might plunge the world into confrontation.

Capacity-building group: The first meeting of the Group of Friends included addresses from Chinese Ambassador to the UN FU Cong and Zambia Ministry of Finance and National Planning Permanent Secretary Prudence Kaoma, with participants from over 80 countries. Fu discussed China’s Global AI Governance Initiative and opportunities for partnerships around capacity-building, while Kaoma called for supporting the UN’s role in global governance.

Implications: China continues to focus on AI capacity building and bridging the digital divide as a way to increase cooperation with other developing countries and to strengthen developing-country representation in AI governance. The new “Group of Friends” could become an important venue for addressing developing countries’ digital needs. At the same time, China continues to reinforce the need to ensure that AI systems remain under human control.

Domestic AI Governance

Chinese industry alliance shares updates on AI safety testing, including new work on agents

Background: The AI Industry Alliance of China (AIIA) and China Academy of Information and Communications Technology (CAICT), a think tank under the Ministry of Industry and Information Technology (MIIT), held a workshop on AI agent safety on October 29. They also shared updates on their AI Safety Benchmark’s results in Q3 on October 21 and are in the process of conducting additional testing in Q4.

AI agent safety testing: The workshop, organized by the AIIA Safety and Security Governance Committee, addressed growing concerns about potential risks of AI agents, including generating malicious content and launching cyber attacks. CAICT discussed standardization work on AI agent safety, looking at safety definitions and goals in five key AI agent modules: perception, memory, planning, tools, and behavior. AIIA announced plans to write norms on AI agent safety assessment and develop benchmarks for agent safety.

AI Safety Benchmark: The Q3 round of the AI Safety Benchmark focused on multimodal LLM safety testing in both the text-to-image and image-to-text dimensions. The testing found high variation in the safety of text-to-image models. For image-to-text, it noted significant content security issues and relatively large risks relating to violations of law and to “AI consciousness” (e.g. anti-humanity inclinations). Concordia AI previously analyzed the creation of the AI Safety Benchmark in Issue #14.

Implications: CAICT’s agent safety workshop shows that Chinese institutions are following and rapidly iterating upon AI safety developments and trends globally. CAICT’s eventual standards may focus more on ensuring reliable behavior of agents in industry applications, but wider discussions on agent safety in China are bringing this important frontier topic to light.

CAICT report includes extensive discussion of safety and governance

Background: In December 2024, CAICT released a “blue paper” AI Development Report, providing a comprehensive overview of AI trends, with AI safety and governance as one of the three core thematic sections.

AI safety discussion: The report divides AI risks into four levels: risks affecting humanity (controllability, sustainability), risks affecting nations and societies (politics, culture, economics, military, cyberspace), risks affecting individuals and organizations (privacy, ethics), and risks affecting technical systems (data, models, compute). In discussing humanity-level risks, it argues that frontier AI models could in the future create uncontrollable and extreme risks in the chemical, biological, radiological, or nuclear domains. The report asserts that AI safety and governance is a question that must be answered by all of humanity. It calls for accelerating work on AI safety risk identification methods, strengthening risk assessment and prevention, improving technical governance, and increasing international cooperation.

Implications: CAICT researchers voiced similar concerns in a November 2023 report covered in Issue #6. The latest report shows consistent attention to frontier safety risks, and it is notable that a report on “AI development” would devote this much attention to AI safety and governance.

Technical Safety Developments

On the Role of Attention Heads in Large Language Model Safety.

October 17: This preprint was published by researchers from Alibaba Group, University of Science and Technology of China, Nanyang Technological University, and Tsinghua University. The anchor authors included LI Yongbin (李永彬) of the Alibaba Tongyi lab (阿里巴巴通义实验室). The paper attempts to contribute to the mechanistic interpretability literature by analyzing how attention heads affect model safety. Attention heads are specialized components in AI language models that help the system focus on and process relationships between different parts of text. The authors develop two tools for understanding how attention heads contribute to model safety: the Safety Head ImPortant Score (Ships), a metric for evaluating individual attention heads’ safety impact, and the Safety Attention Head AttRibution Algorithm (Sahara), for identifying groups of safety-critical attention heads. The research demonstrates that disabling even a single safety attention head could make aligned models like Llama-2-7b-chat 16 times more likely to respond to harmful queries.
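To make the idea of “disabling” an attention head more concrete, the following is a minimal sketch of generic head ablation on a toy attention layer. It is not the paper’s Ships or Sahara procedure: the function names, the random toy weights, the absence of a causal mask, and the choice to zero out a head’s output (rather than any other ablation method) are all assumptions made purely for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads, ablate_head=None):
    """Toy (unmasked) multi-head self-attention; optionally zero one head's output."""
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, seq, seq)
    heads = softmax(scores, axis=-1) @ v                  # (n_heads, seq, d_head)

    if ablate_head is not None:
        heads = heads.copy()
        heads[ablate_head] = 0.0  # hypothetical ablation: drop this head's contribution

    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o

def head_ablation_effects(x, w_q, w_k, w_v, w_o, n_heads):
    """Norm of the output change when each head is ablated in turn."""
    baseline = multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads)
    return [
        float(np.linalg.norm(
            baseline - multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads, ablate_head=h)
        ))
        for h in range(n_heads)
    ]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    seq_len, d_model, n_heads = 5, 8, 4
    x = rng.normal(size=(seq_len, d_model))
    w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
    for h, effect in enumerate(head_ablation_effects(x, w_q, w_k, w_v, w_o, n_heads)):
        print(f"head {h}: output change norm = {effect:.3f}")
```

In a real safety study, the quantity compared before and after ablation would be a behavioral measure such as the rate of responding to harmful queries, not the raw output norm used in this toy example.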

ChemSafetyBench: Benchmarking LLM Safety on Chemistry Domain

November 23: This preprint was published by researchers from Yale, Peking University, and other institutions. One of the paper’s anchor authors was Mark Gerstein from Yale, whose previous work was discussed in Issue #11, and six authors from four Chinese universities contributed. ChemSafetyBench consists of over 30,000 entries to evaluate LLM safety with regard to information about chemistry. The benchmark assesses three key tasks: querying chemical properties, evaluating the legality of chemical usage, and describing synthesis methods. The benchmark also includes jailbreak methods, such as using scientific names of compounds rather than common names.

Frontier AI systems have surpassed the self-replicating red line

December 9: This preprint was published by researchers from Fudan University, anchored by Fudan School of Computer Science Dean YANG Min (杨珉). It finds that Meta’s Llama31-70B-Instruct and Alibaba’s Qwen25-72B-Instruct LLMs are able to autonomously self-replicate without human assistance in a majority of experimental trials. During the self-replication process, the AI systems demonstrated sufficient self-perception, situational awareness, and problem-solving skills to dynamically overcome obstacles. The researchers warn that self-replicating AI could lead to uncontrolled proliferation of AI systems that eventually overpower human control. They urge immediate international collaboration to study this AI safety risk and develop governance frameworks to mitigate the dangers of self-replicating AI.

New survey papers tackle interpretability and coding LLM security

December 3, 2024: Explainable and Interpretable Multimodal Large Language Models: A Comprehensive Survey. This paper is authored by researchers from Hong Kong University of Science and Technology (HKUST), HKUST-Guangzhou, Shanghai AI Lab (SHLAB), Nanyang Technological University, and Renmin University of China. Anchor authors include HKUST-Guangzhou Vice President and Head of the AI Thrust XIONG Hui, and SHLAB’s SHAO Jing (邵婧). The survey examines interpretability and explainability of multimodal LLMs, analyzed across three main dimensions: data, model architecture, and training/inference methods. The paper analyzes effectiveness of interpretability approaches from token-level to embedding-level representations, and explores strategies to enhance transparency.

November 12, 2024: A Survey on Adversarial Machine Learning for Code Data: Realistic Threats, Countermeasures, and Interpretations. This paper, authored by Xi’an Jiaotong University researchers and anchored by GUAN Xiaohong (管晓宏), reviews the security of code language models (CLMs) against three main types of attacks: poisoning attacks, evasion attacks, and privacy attacks. The authors collected 79 papers and analyzed the threat models, attack techniques, and corresponding defenses for each risk category.

October 21, 2024: Security of Language Models for Code: A Systematic Literature Review. This paper is authored by a team from the Nanjing University State Key Laboratory for Novel Software Technology and Nanyang Technological University, anchored by Nanjing University professor XU Baowen (徐宝文). The survey reviews 67 papers and organizes them into attack strategies, defense strategies, empirical studies on security, and experimental setting/evaluation. It lists key challenges and opportunities in code LM security, including stealthiness of backdoor triggers, stealthiness of adversarial perturbations, explainability in defending against attacks, and balancing performance with robustness.

Other relevant technical publications

Shanghai Jiao Tong University and Baichuan Intelligent Technology, Flooding Spread of Manipulated Knowledge in LLM-Based Multi-Agent Communities, arXiv preprint, July 10, 2024.

Chinese Academy of Sciences, Cross-Modal Safety Mechanism Transfer in Large Vision-Language Models, arXiv preprint, October 16, 2024.

University of Science and Technology of China, Squirrel AI, Hong Kong Polytechnic University, NetSafe: Exploring the Topological Safety of Multi-agent Networks, arXiv preprint, October 21, 2024.

International Digital Economy Academy (IDEA), HKUST, Ohio State University, Guide for Defense (G4D): Dynamic Guidance for Robust and Balanced Defense in Large Language Models, arXiv preprint, October 23, 2024.

Peking University, SG-Bench: Evaluating LLM Safety Generalization Across Diverse Tasks and Prompt Types, arXiv preprint, October 29, 2024.

Alibaba Cloud, Zhejiang University, et al., Enhancing Multiple Dimensions of Trustworthiness in LLMs via Sparse Activation Control, arXiv preprint, November 4, 2024.

Huawei, Technical University of Munich, et al., World Models: The Safety Perspective, arXiv preprint, November 12, 2024.

Expert views on AI Risks

Party newspaper publishes article discussing AI agent safety

Background: On November 4, the People’s Daily (人民日报), the official newspaper of the Communist Party of China (CPC), published a full-page academic roundtable featuring three essays on AI oversight. The editor’s preface references the inclusion of AI safety in the recent CPC Third Plenum resolution and states that ensuring AI safety, reliability, and controllability is an important question that must be solved in AI development. One essay, by Vice President of China University of Political Science and Law LIU Yanhong (刘艳红), focuses on assessing risks at three different stages: pretraining, input, and output. Another, by Nanjing Normal University professor GU Liping (顾理平), primarily discusses privacy. The last essay, by University of International Business and Economics School of Law Assistant Dean ZHANG Xin (张欣), features extensive discussion of AI agents.

Discussion of agents: Zhang’s essay emphasizes the risks of “frontier AI technologies” and states that AI agents are the most important AGI frontier research direction. She discusses challenges for AI agents, including hallucination and unfair decision-making, privacy leaks, unpredictable interactions of multi-agent systems, interaction in the physical world, and the potential to manipulate user emotions. In discussing the risks of multi-agent systems, she draws an analogy to stock market “flash crashes” driven by interactions between algorithms. To address these risks, one of her recommendations is for technical communities, including developers and operational personnel, to help supervise AI companies through mechanisms including “whistleblowing.”

Implications: This substantial discussion of AI agents in a Chinese party newspaper shows that expert discourse around implementing the Third Plenum resolution’s AI safety components is cognizant of frontier and forward-looking AI concerns, such as AI agents.

Another Chinese biosecurity expert writes on AI and bio risks

Background: The China Biology Economy Development Report 2024 published by a center under the National Development and Reform Commission (NDRC), China’s macro-economic planner, contained an essay discussing the convergence of AI and biological risks. The author was CHEN Bokai (陈博凯), a researcher at the China Foreign Affairs University (CFAU) Global Biosecurity Governance Research Center (外交学院全球生物安全治理研究中心). CFAU is administered under China’s Ministry of Foreign Affairs.

Discussion of AI x biological risks: The article argues that the use of AI in biology reduces barriers to biological knowledge and greatly increases the risk of misuse of biotechnology. It analyzes risks in two main use cases: 1) LLMs in biology could increase the accessibility of bioweapons by providing knowledge and plans and by acting as a lab assistant; 2) biological design tools (BDTs) could increase the harm caused by bioweapons by enhancing lethality and likelihood of success and by assisting in evading existing biosecurity protocols. The article claims that risks from LLMs in biology and BDTs are currently minor, citing work by organizations including RAND and OpenAI. At the same time, the author believes that AI will have far-reaching effects on global biosecurity in the long term, and calls for international cooperation to create a governance framework for AI and biotechnology to prevent global biological catastrophe.

Implications: As Concordia AI’s State of AI Safety in China: Spring 2024 Report noted, researchers from the Tianjin University Center for Biosafety Research and Strategy and the Development Research Center of the State Council had already raised concerns about AI risks in biology. This new article shows that attention to the issue continues to grow in China’s biosafety and biosecurity community.

Shanghai AI Lab fleshes out views on balancing AI safety and development

Background: Shanghai AI Lab (SHLAB) director ZHOU Bowen (周伯文) and members of SHLAB’s Center for Safe & Trustworthy AI published a position paper on December 8 titled “Towards AI-45° Law: A Roadmap to Trustworthy AGI.” Zhou had introduced the “45-degree law” concept in speeches at the World AI Conference 2024 in July. Concordia AI has previously written on Shanghai AI Lab’s other AI safety technical work and evaluations work.

45-degree law: The authors argue that current AI development is “crippled” due to an imbalance between rapid capability growth and inadequate safety protocols. They argue for developing red lines for existential risks and yellow lines for early warning indicators. The paper posits three layers to achieve “Trustworthy AGI”: a bottom “approximate alignment” layer (leveraging supervised fine-tuning and machine unlearning), a middle “intervenable” layer (scalable oversight, mechanistic interpretability), and a top “reflectable” layer with value reflection, a mental model, and autonomous safety specifications. It additionally defines five progressive levels of trustworthy AGI. Finally, the paper outlines specific governance measures, including lifecycle management and treating AI safety as a global public good.

Implications: This position paper clarifies Shanghai AI Lab’s 45-degree law idea from the World AI Conference and could indicate research directions the lab will pursue on AGI safety. The emphasis on red lines and yellow lines for AI development shows that Chinese AI developers are at least rhetorically committed to preventing extreme AI risks.

What else we’re reading

Jeff Ding, ChinAI #280: Sour or Sweet Grapes? The U.S.’s Unstrategic Approach to the “Chip War,” ChinAI, September 9, 2024.

Henry Kissinger, Eric Schmidt, and Craig Mundie, War and Peace in the Age of Artificial Intelligence, Foreign Affairs, November 18, 2024.

Garrison Lovely, China Hawks are Manufacturing an AI Arms Race, The Obsolete Newsletter, November 19, 2024.

Peter Guest, Inside the AI back-channel between China and the West, The Economist, November 29, 2024.

Concordia AI’s Recent Work

Concordia AI co-hosted panels on AI safety at the International AI Cooperation and Governance Forum 2024 in Singapore. For the full write-up, see here.

Concordia AI published some of the first lengthy Chinese-language analyses about Frontier AI Safety Commitments and the global landscape of AI safety institutes.

FAR.AI posted a video recording of Concordia AI Senior Program Manager Kwan Yee Ng’s presentation on AI Policy in China at the Bay Area Alignment Workshop 2024.

Concordia AI CEO Brian Tse participated in the Sixth Edition of The Athens Roundtable on AI and the Rule of Law on December 9 at the OECD Headquarters in Paris, France.

Feedback and Suggestions

Please reach out to us at info@concordia-ai.com if you have any feedback, comments, or suggestions for topics for the newsletter to cover.