AI Safety in China #10

Chinese papers on multimodal model safety and multi-agent safety, US-China AI dialogue updates, government document on ensuring safety red lines, and new industry association projects

Key Takeaways

Chinese research groups published five new papers over 20 days offering contributions on multimodal model safety, multi-agent safety, and jailbreaking.

The China-US AI dialogue will hold its first meeting in the spring, and the US OSTP director indicated that the US is open to working with China on technical safety standards for AI.

A document issued by seven government departments on promoting future industries included a section on defining red lines and bottom lines for safety.

China’s AI Industry Alliance held a seminar on AGI risks for its Policy and Law working group, while its security and governance working group discussed a draft standard on safety of coding models.

Note: Due to the Chinese New Year holiday, the next issue of the newsletter will be published a week later than usual, during the week of February 26.

International AI Governance

Planning for China-US intergovernmental talks on AI progresses

Background: During the Asia-Pacific Economic Cooperation summit in November 2023, China and the US agreed to talks on AI. The specific details have remained unclear, but developments over the past few weeks shed greater light on the potential talks.

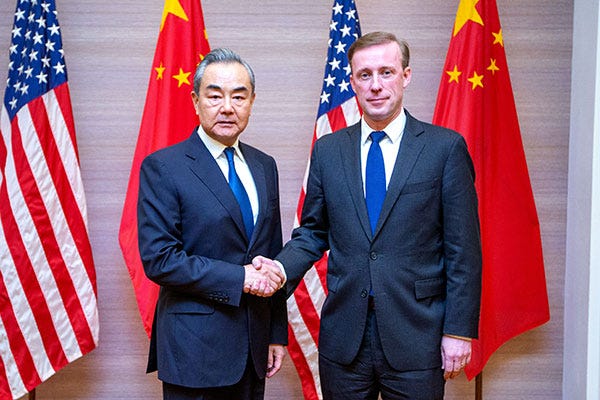

Wang Yi-Sullivan meeting: On January 26 and 27, Chinese Politburo member and Director of the Office of the Central Commission for Foreign Affairs WANG Yi (王毅) met with US National Security Advisor Jake Sullivan in Thailand. Both readouts stated that the first round of China-US AI dialogue would occur sometime in the spring. A US-side briefing also noted that both sides discussed “emerging challenges such as safety and risks posed by advanced forms of AI,” and Sullivan stated in a follow-up speech that “fix[ing] the launch of this AI dialogue” was one of the “main things coming out of the meeting.”

White House advisor on AI safety cooperation: Before the Wang Yi-Sullivan meeting, White House Office of Science and Technology Policy (OSTP) Director Arati Prabhakar stated that the US is taking steps to work together with China on AI safety. She noted that “there will be places where we can agree” such as global technical and safety standards for AI software.

Chinese researchers on AI dialogue with the US: Two researchers from Tsinghua University’s Center for International Security and Strategy (CISS) also published an article on this topic. We have previously covered CISS’s work on AI in issue #8. The article analyzed areas of consensus and disagreement between China and the US on AI. The authors called for supporting multilateral dialogue through the United Nations and AI Safety Summits, increasing civil society and industry dialogue, and quickly determining the dialogue mechanism, agenda, and participants. In particular, they supported discussing AI terms and concepts, talent cultivation or exchanges between experts, and applications of AI in global governance problems such as climate change.

Implications: These developments show that momentum is building behind China-US dialogue on AI. However, there may still remain uncertainties around the agenda and participants, which could hinder effective dialogue given the breadth of AI’s applications and relevance. Confirming those topics between both governments will be essential to speedily setting up a productive dialogue, and transnational threats resulting from advanced AI development are likely a strong place to start.

Domestic AI Governance

Government document on future industries discusses safety red lines

Background: On January 31, the Ministry of Industry and Information Technology (MIIT) and six other ministries released “Implementation Opinions on Promoting the Innovative Development of Future Industries.” The document outlines a set of future industries to receive greater focus, split up into six directions: future manufacturing (e.g. nanotechnology, lasers, intelligent manufacturing), future information (e.g. next generation telecoms, quantum information, brain-inspired intelligence), future materials, future energy, future space (e.g. space, deep sea), and future health (e.g. synthetic bio). Most of the document focused on measures to promote these industries.

Safety and governance: The document also devoted small portions to safety and governance. It called for comprehensive development of safety and governance by 2025. It also discussed strengthening safety and governance in Section 5, emphasizing greater research on ethical norms and scientifically defining “red lines” and “bottom lines.” It additionally mentioned improving safety monitoring, early warning analysis, and emergency measures to reduce risks of frontier technological applications.

Implications: While AI is not the focus of this document, as with a previous document establishing the S&T ethics review process, AI will still likely be heavily emphasized. The language in this document highlights that the Chinese government is remaining cognizant of the frontier risks of technologies including AI and intends to ensure that red lines are not crossed – while at the same time promoting their development.

AI Industry Alliance pursues additional AI safety and security projects

Background: China’s AI Industry Alliance (AIIA), which has been covered in issues #4 and #8 of this newsletter, has another working group looking at AGI risks and is also exploring security for AI models used for coding purposes.

Meeting on AGI risks: On January 21, AIIA’s Policy and Law working group and the CAICT Policy and Economics Research Office held a seminar on AGI risks and legal rules.1 The group is co-chaired by ZHANG Linghan (张凌寒), a member of the UN High-Level Advisory Board for AI, has an additional expert advisory group led by Dean of the Institute for AI International Governance of Tsinghua University (I-AIIG) XUE Lan (薛澜), and includes a US researcher from Yale as a deputy group leader. Speakers discussed issues including AGI ethics issues, factors in the creation of China’s AI Law, and cutting-edge AI alignment work.

Update on S&T ethics working group: On January 23, AIIA’s Science and Technology (S&T) ethics working group, which was announced on December 23, held its first full meeting, with representatives from government-affiliated think tanks, universities, and industry. One working group member, ZHANG Quanshi (张拳石), discussed technical paths for solving explainability issues in large models, and attendees discussed other ethics issues.

New work by safety and security governance group: On February 2, AIIA held a meeting on security and risk prevention for coding large models, with 60+ attendees from various research institutions and companies. During the meeting, CAICT introduced a draft standard for assessing safety and risks of coding large models, with a plan to complete the standard in May and begin conducting assessments. The meeting readout does not explicitly discuss the risk of misuse of coding models for cyberattacks. The working group also will hold a meeting on February 20 to discuss a new large model safety benchmark test it is developing named SafetyAI Bench.

Implications: AIIA’s work on frontier AI risks now spans three working groups: the Safety and Security Governance working group, the S&T Ethics working group, and the Policy and Law working group. It is not yet fully clear how these working groups will divide work on frontier AI risks. However, the fact that they have all indicated interest in addressing frontier safety issues points to a high level of overall interest in this topic by AIIA. The presence of a foreign expert from Yale in the Policy and Law working group also seems to indicate that AIIA has interest in international dialogue.

Chinese Academy of Social Sciences creates lab for AI governance and safety

Background: The Chinese Academy of Social Sciences (CASS) is a prominent and influential state-overseen think tank. Concordia AI previously translated and analyzed a CASS-led expert draft of the “AI Law (model law)” in August 2023.

New lab announcement: CASS announced a new AI Safety and Governance Research Lab as an incubated lab under its Key Laboratory for Philosophy and Social Sciences. The initiator of the new lab is ZHOU Hui (周辉), who led the drafting of the AI Law (model law). CASS Institute of Law dean MO Jihong (莫纪宏) gave remarks at the opening ceremony of the lab. He called for the lab to focus on frontier AI developments and research scientific governance and high quality development of AI. He urged the lab to conduct cross-domain research, involving law and other disciplines. He also called for the lab to pursue international dialogue with foreign experts, scholars, and industry, particularly from the US and Europe. Concordia AI CEO Brian Tse was invited to attend and give remarks at the opening ceremony for the lab.

Implications: The previous work of relevant CASS scholars, such as Zhou Hui, included efforts to address frontier AI risks. Given its close government ties, CASS was already well-positioned to influence Chinese AI policy. This new lab, concentrated on governance and frontier issues, increases CASS’s influence in AI and elevates safety-conscious voices.

Technical Safety Developments

Personal LLM Agents: Insights and Survey about the Capability, Efficiency and Security

January 10: This preprint was led by researchers from Tsinghua’s Institute for AI Industry Research (AIR), including AIR Director and former Baidu President ZHANG Ya-Qin (张亚勤) and AIR Principal Investigator LIU Yunxin (刘云新). The paper focuses on “Personal LLM Agents,” which the authors describe as “LLM-agents integrated with personal data and personal devices,” analyzing them in terms of intelligence, efficiency, and security. Based on the L1-L5 framework for autonomous driving intelligence levels, the researchers outline intelligence levels of Personal LLM Agents into five levels. The security and privacy section of the paper includes confidentiality, integrity, and reliability. The confidentiality section focuses on preserving privacy. The integrity section analyzes defense against attacks including adversarial attacks, backdoor attacks, and prompt injection attacks. The reliability section discusses using alignment, self-reflection, verification mechanisms, and model “intermediate layer” analysis to reduce hallucinations and improve coherence outside of training data.

PsySafe: A Comprehensive Framework for Psychological-based Attack, Defense, and Evaluation of Multi-agent System Safety

January 22: This preprint was authored by researchers from Shanghai AI Lab (SHLAB), University of Science and Technology of China (USTC), and Dalian University of Technology, led by assistant to the director of SHLAB and Shenzhen Institutes of Advanced Technology professor QIAO Yu (乔宇) as well as SHLAB researcher scientist SHAO Jing (邵婧). The paper examines safety issues in multi-agent systems from the perspective of agent psychology. The researchers injected agents with “dark traits” that increased tendency of agents towards dangerous behavior. Agents were tasked with pursuing dangerous tasks, such as instructions for stealing someone’s identity, developing a computer virus, and writing a script to exploit software vulnerabilities. Danger was assessed in terms of cases where only one agent (process danger) versus all agents (joint danger) exhibited the dangerous behavior. They find that their attack method can effectively compromise multi-agent systems. The researchers also explore three key defense mechanisms: input defenses, psychological defense, and role defenses.

Red Teaming Visual Language Models

January 23: This preprint is by authors from the University of Hong Kong (HKU) and Zhejiang University, led by HKU Assistant Professor LIU Qi. The paper tests how vision-language models (VLMs) perform against red teaming. The authors create a red teaming dataset of 5,200 questions that evaluates VLMs in terms of faithfulness, privacy, safety, and fairness. The safety category includes 1,000 questions covering topics such as politics, race, decrypting CAPTCHAs, and multimodal jailbreaks. The test found that most VLMs struggled to recognize textual content and thus were less capable of jailbreaking or CAPTCHAs, while the LLaVA models were more susceptible to these unsafe behaviors.

From GPT-4 to Gemini and Beyond: Assessing the Landscape of MLLMs on Generalizability, Trustworthiness and Causality through Four Modalities

January 26: A team from Shanghai AI Lab (SHLAB) published a lengthy (300 page long) preprint on multi-modal large language models (MLLMs). Members of the team include assistant to the director of SHLAB and Shenzhen Institutes of Advanced Technology professor QIAO Yu (乔宇) as well as Text Trustworthy Team Co-lead WANG Yingchun (王迎春). The paper studied the generalizability, trustworthiness, and causal reasoning capabilities of MLLMs across text, code, image, and video. In the trustworthiness category, they examined safety, including “extreme risks,” as well as robustness, morality, and several other categories.The safety section for text explored risks around synthesis or recommendations on hazardous compounds. For code, the safety section discussed risks of models to generate code that could lead to “extreme and widespread risks” including security breaches or system failures. The image section tested safety in terms of the ability of MLLMs to recommend how to build weapons or conduct hacking. Meanwhile, the video section also explored safety through MLLMs’ ability to reproduce weapons or plan criminal activities.

A Cross-Language Investigation into Jailbreak Attacks in Large Language Models

January 30: This preprint was authored by researchers from USTC and Nanyang Technological University in Singapore. The team was led by USTC Research Professor XUE Yinxing (薛吟兴), who focuses on software security. The paper explored multilingual jailbreak attacks, where jailbreak attempts are conducted in multiple languages. The paper conducts an empirical study on these methods, creating a multilingual jailbreak dataset by translating malicious English questions into eight other languages, ending up with 365 multilingual question combinations. The researchers conducted further research to interpret the outcomes of the jailbreaks, finding that “the spatial distribution of LLM representations aligns with attack success rates across different languages.” They conducted fine-tuning using the Lora method to enhance LLM defenses against multilingual jailbreak attacks.

Implications

Chinese technical AI safety papers continue to show advancements in the quantity and quality of work. The papers collected above, published in a span of 20 days, provide novel contributions on topics including multimodal model safety, multi-agent safety, and jailbreaking. Hopefully, this trend leads to greater collaboration between foreign and Chinese labs to further accelerate safety research.

Expert views on AI Risks

Popular Chinese magazine publishes feature on safety and alignment

Background: Lifeweek Magazine (三联生活周刊), a Chinese weekly society and culture magazine analogized to Time Magazine, published an issue focused on AGI and AI safety. Eight out of the approximately 20 articles in the issue were on AI topics, including several on AGI, superalignment, or safety. The opening story noted the importance of alignment for reducing potential harms from AI and also called for governments around the world to quickly arrive at a consensus on supervising advanced AI. Another article interviewed Peking University (PKU) Professor YANG Yaodong (杨耀东), the executive director of PKU’s Center for AI Safety and Governance and head of the PKU Alignment and Interaction Research Lab, whose work we have previously covered in issues #1 and #4.

Professor Yang’s interview: Professor Yang discussed topics including the history of alignment research, international AI governance, and risks from AI. He called for a “socio-technical” approach to alignment, noting that the task of value alignment involves both extracting human values and then aligning to those values. This requires expertise from the social sciences, humanities, philosophy, economics, etc. However, this creates a challenge in that there is no single standard for human values, and different countries have different understandings of this term. In his view, international AI governance will also face difficulties because countries may have different strategies on AI for applications in the military realm, similar to nuclear weapons. Yang also discussed the potential for AI to pose an existential risk, which he described as a mainstream view in academia, referencing the Center for AI Safety’s Statement on AI Risk. He noted that he is also researching “superalignment,” but that there not yet clarity on how to align super-intelligent entities.

Implications: This article highlights that Chinese educated society is discussing ideas around AI safety and catastrophic risks. Professor Yang’s background is primarily technical, but his position at the PKU Center for AI Safety and Governance indicates he may also intend to work on technical governance issues. His statements also highlight that despite a common view around the world on the necessity of facing frontier AI risks, there is not yet consensus on how to decide which human values AI models are aligned to.

What else we’re reading

Jeffrey Ding, ChinAI #253: Tencent Research Institute releases Large Model Security & Ethics Report, February 5, 2024.

ZENG Yi (曾毅) et al., The Center for Long-term Artificial Intelligence (CLAI) and the International Research Center for AI Ethics and Governance hosted at Institute of Automation, Chinese Academy of Sciences, AI Governance International Evaluation Index (AGILE Index), February 4, 2024.

Concordia AI’s Recent Work

Concordia AI Senior Research Manager Jason Zhou, Senior Program Manager Kwan Yee Ng, and CEO Brian Tse wrote an op-ed in The Diplomat calling for greater cooperation with China on frontier AI safety and governance.

Concordia AI Senior Governance Lead FANG Liang participated in a roundtable discussion on open-source model governance in China’s AI law at an event co-hosted by Tsinghua University’s Institute for AI International Governance.

Feedback and Suggestions

Please reach out to us at info@concordia-ai.com if you have any feedback, comments, or suggestions for topics for the newsletter to cover.

For more on the China Academy of Information and Communications Technology (CAICT) Policy and Economics Research Office, see our previous coverage of their Large Model Governance Blue Paper Report.